Once in a while I’m going to post articles in English to share them with a broader community. This is a serious case, and so far there is no information about it to be found on the internet.

When good things cause bad effects

VMware vSphere API for Array Integration (VAAI) is an elegant feature introduced in vSphere 4.1 to offload disk related workloads from the host to the storage array. A lot of recent storage models support VAAI while older models don’t have this feature implemented.

After upgrading from vSphere 4.1 to vSphere 5.0, one of our customers experienced extremely sluggish behaviour during svMotion and while creating eager zeroed disks. The ESXi servers and the storage had worked well before the upgrade and did not have any storage-related problems.

Environment

Two (partly) mirrored Fujitsu Eternus DX90 S1 connected to redundant FC fabrics (Brocade 300), standing in different fire protection zones. Each with two active storage controller modules (cm0 and cm1). File system VMFS3. Disks SAS 15k.

Four Primergy RX300 S6, two of them in each fire protection zone.

To make things clear: I know the Eternus DX90 S1 does not support VAAI, so we never spent a second thinking we might get any trouble from that side. VAAI is enabled by default in vSphere 5, and IMHO ESXi should find out whether the connected storage understands VAAI commands or not.

The load paradox

When our customer created a new VM with an eager zeroed disk, latencies on almost every LUN virtually exploded (up to 800 ms and more), and not only on the same RAID group but also on unrelated RAID groups. We did a lot of testing and witnessed the same phenomenon every time. The load on the storage controller that owned the LUN went to 100% while the disks involved worked at less than 20% of their maximum I/O capacity. Normally you’d expect the disks to be the bottleneck, but in this case it was the other way round.

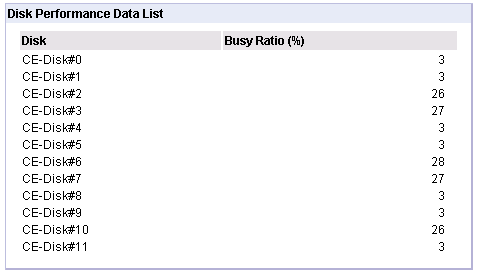

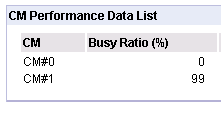

Controller module cm1 is under full load while the disks aren’t. Disks #2, 3, 6, 7 and 10 are owned by cm1 and are directly involved in the creation of the eager zeroed vmdk disk.


We could rule out any FC pathing effects, because we saw the same phenomenon on every host, with either a local or a remote fibre connection to the storage unit. We could also rule out any mirror effect, because it happened on non-mirrored and mirrored LUNs equally. Even setting the mirror to “pause” did not change anything.

When we talked to Fujitsu support, we were told that there might be some VAAI issues on Eternus DX90 S2 systems, but there was no information regarding the S1 series yet.

Disable VAAI

We decided to disable VAAI on all ESXi hosts, but we didn’t expect any boost in performance. We just wanted to switch off an unused feature. In one of my recent blog posts I explained how to disable VAAI by using either the GUI, esxcli or PowerCLI. Basically you have to set these advanced parameters to 0 (zero).

- DataMover – HardwareAcceleratedMove (default 1)

- DataMover – HardwareAcceleratedInit (default 1)

- VMFS3 – HardwareAcceleratedLocking (default 1)
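The three parameters above map to option paths that can be set from the ESXi shell with esxcli. Here is a minimal Python sketch that only builds the command lines and does not execute anything. The helper name `build_esxcli_commands` and the constant `VAAI_OPTIONS` are made up for illustration, and the `esxcli system settings advanced set` syntax is the ESXi 5.x form as far as I know, so please verify it on one of your own hosts before relying on it.

```python
# Option paths for the three VAAI primitives listed above.
# 1 is the default (enabled), 0 disables the primitive.
VAAI_OPTIONS = [
    "/DataMover/HardwareAcceleratedMove",
    "/DataMover/HardwareAcceleratedInit",
    "/VMFS3/HardwareAcceleratedLocking",
]


def build_esxcli_commands(value=0):
    """Return one esxcli argument list per VAAI option (nothing is executed)."""
    if value not in (0, 1):
        raise ValueError("value must be 0 (disable) or 1 (enable)")
    return [
        ["esxcli", "system", "settings", "advanced", "set",
         "--option", option, "--int-value", str(value)]
        for option in VAAI_OPTIONS
    ]


if __name__ == "__main__":
    # Print the commands so they can be reviewed before being run on a host.
    for command in build_esxcli_commands(0):
        print(" ".join(command))
```

Calling `build_esxcli_commands(1)` yields the commands that restore the defaults, which is handy once a fixed firmware is in place.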

Disclaimer

We have informed Fujitsu storage support (FTS in Germany) about the issue. They have been very helpful in isolating the problem. Please get in contact with your domestic storage support staff before disabling VAAI.

Three zeros can make a big difference

After disabling VAAI on all ESXi hosts, all negative effects vanished completely. We created an eager zeroed disk exactly the way we had done it before (good scientific practice) and kept monitoring disks, storage controller and LUN latency. This time the load on the storage controller increased to about 50% (good) and the disks involved became busy. That’s what you’d expect to see. The whole process was as fast as usual again, with no negative influence on other RAID sets.

The ESXi hosts seem to have flooded the storage controllers with VAAI commands that the storage didn’t understand, so the controllers spent more time dealing with unknown or illegal commands than with I/O.

| Storage system | time to complete (VAAI on) | time to complete (VAAI off) |
|---|---|---|
| Eternus DX90 S1 | 180 s | 14 s |
| Fibrecat SX100 | 14 s | 14 s |
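To put the numbers in the table into perspective, here is a small Python sketch that computes the slowdown factor per storage system. The times are copied from the table. The dictionary layout and the function name `slowdown` are just illustrative choices.

```python
# Completion times in seconds, copied from the table above.
TIMES = {
    "Eternus DX90 S1": {"vaai_on": 180, "vaai_off": 14},
    "Fibrecat SX100": {"vaai_on": 14, "vaai_off": 14},
}


def slowdown(times):
    """Ratio of the VAAI-on time to the VAAI-off time (1.0 means no difference)."""
    return times["vaai_on"] / times["vaai_off"]


if __name__ == "__main__":
    for system, times in TIMES.items():
        print(f"{system}: {slowdown(times):.1f}x")
```

With VAAI enabled the DX90 S1 took roughly 13 times as long, while the Fibrecat SX100 showed no difference at all.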

Other affected systems

Systems I have checked so far that show the same problem:

- Eternus DX90 S1 (FC)

- Eternus DX80 S1 (FC)

- Eternus DX60 S1 (FC)

This seems to be unrelated to the firmware version. At the time of writing it also happens with the latest firmware, V10L66.

Update

Fujitsu support (unofficially) announced a firmware release for DX60/80/90 S1 to fix the issue. It is likely to be available in October.

Update

The issue has been fixed with firmware V10L68.