Every year, VMware grants the vExpert award to individuals who have made a special contribution to the VMware community. This can be through publications, presentations, blogs, or work in the VMware User Group (VMUG). I am pleased that 2023 marks my seventh consecutive year as part of the vExpert community.

In addition to the general vExpert award, there are subprograms for specialized areas:

- Application Modernization

- AVI

- Cloud Management

- Cloud Provider

- End User Computing (EUC)

- Multi-Cloud

- NSX

- vExpertPro

- Security

I applied for three subprograms, vExpertPro, Application Modernization, and Multi-Cloud, and was accepted in all three.

vExpertPro

The mission of the vExpertPro program is to create a global network of vExperts who are willing to find potential vExperts in their local communities, support them, and mentor them on their way to earning the award.

For this purpose, there are vExpertPros in many regions of the world. I have been a member of this group since 2021 and have been confirmed for another year.

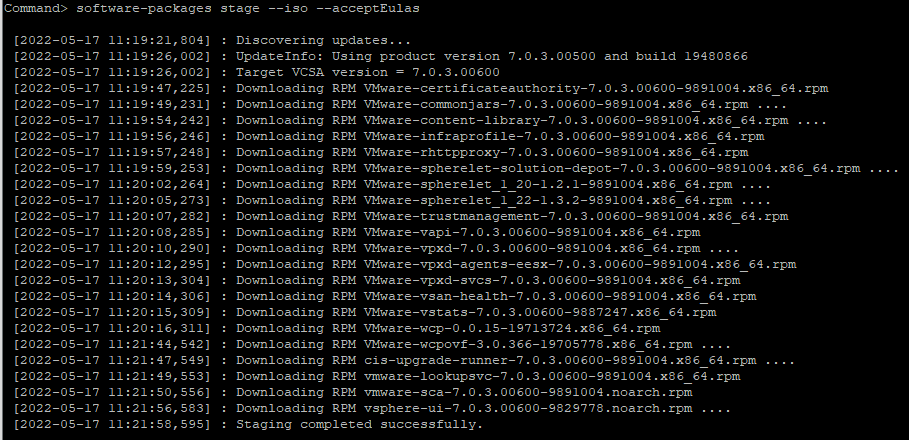

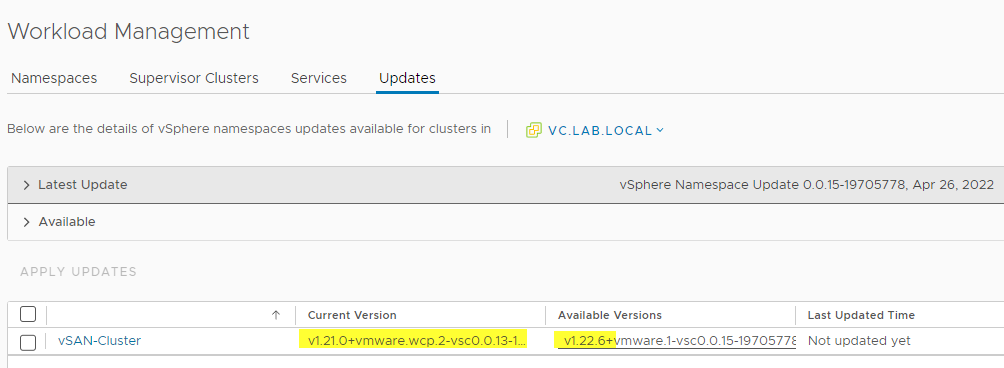

vExpert Multi-Cloud

The Multi-Cloud area covers large parts of the VMware Compute portfolio. Here, the term cloud includes not only the public cloud but also local data centers (private cloud) and combinations of both approaches (hybrid cloud). It spans numerous products, such as vSphere, vSAN, VMware Cloud Foundation (VCF), Aria, VMware Cloud on AWS, Site Recovery Manager (SRM), and vCloud Director (VCD).

I applied for this relatively new vExpert path for the first time in 2023 and was accepted. Many thanks to the business unit for the decision.

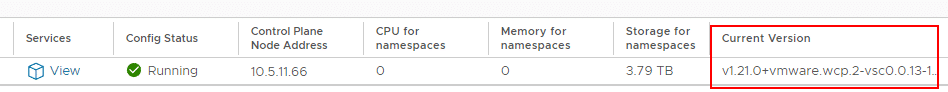

vExpert Application Modernization

Application Modernization is all about Tanzu and Kubernetes, as well as the ecosystem around these technologies. Keith Lee described the background in great detail in his article “Announcing the VMware Application Modernization vExpert Program 2023”.