I get a lot of questions during training sessions and while working on vSAN designs. People ask me why a vSAN cluster requires 30% slack space. At first glance, it looks like a waste of (expensive) resources, and in all-flash clusters in particular it is a significant cost factor. This slack space is often mistaken for a growth reserve, but that's wrong. It is by no means a reserve for future growth. On the contrary, it is short-term allocation space that the vSAN cluster needs for rearranging data during storage policy changes.
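To put rough numbers on both the cost and the reason for the reserve, here is a minimal Python sketch. It assumes slack is set aside as 30% of raw datastore capacity and that, when a policy change alters an object's layout, the new components are written in full before the old ones are deleted. It ignores metadata, deduplication/compression and other overheads, so treat the results as back-of-the-envelope figures, not sizing guidance.

```python
# Raw capacity consumed per GB of VM data for common vSAN storage policies.
POLICY_OVERHEAD = {
    "RAID-1, FTT=1": 2.0,    # two full mirrors
    "RAID-1, FTT=2": 3.0,    # three full mirrors
    "RAID-5, FTT=1": 4 / 3,  # erasure coding, 3 data + 1 parity (all-flash only)
    "RAID-6, FTT=2": 1.5,    # erasure coding, 4 data + 2 parity (all-flash only)
}


def usable_data_gb(raw_gb, policy, slack_fraction=0.30):
    """VM data that fits after the slack reserve is set aside (simplified)."""
    return raw_gb * (1 - slack_fraction) / POLICY_OVERHEAD[policy]


def policy_change_peak_gb(data_gb, old_policy, new_policy):
    """Raw capacity an object occupies while it is rebuilt with a new layout.

    Assumes the old and new layouts coexist until the rebuild completes,
    so the temporary footprint is the sum of both.
    """
    return data_gb * (POLICY_OVERHEAD[old_policy] + POLICY_OVERHEAD[new_policy])
```

With these assumptions, 10,000 GB of raw capacity holds about 3,500 GB of VM data under RAID-1, FTT=1 once 30% is kept free. A 1,000 GB VM switched from RAID-1, FTT=1 (2,000 GB raw) to RAID-5, FTT=1 briefly needs about 3,333 GB raw, and that temporary extra space is exactly what the slack is for.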

Unclaim vSAN Disks in ESXi Host

While playing with the latest ESXi/vSAN beta, I ran into a problem. I was about to deploy a vCenter Server Appliance (VCSA) onto a single ESXi host that was designated to become a vSAN cluster. During the initial configuration of vCenter, something stalled. Needless to say, it was a DNS problem. 😉

That part of a vCenter/vSAN deployment is delicate. If something goes wrong here, you have to start over and deploy a new vCenter appliance. When you run the installer a second time (after fixing your DNS issues), you won't see any disk devices available to be claimed by vSAN. Where have they gone? Well, they are actually still there: during the first deployment attempt they were claimed by vSAN and now form a vSAN datastore. A greenfield vSAN deployment on the first host, however, needs disks that do not contain any vSAN or VMFS datastore.

How to release disks?

Usually you would remove disk groups in vCenter, but we don't have a vCenter at this point. It looks like a chicken-and-egg problem. However, we do have a host, a shell and esxcli. Start the SSH service on the host and connect to the shell (e.g. with PuTTY).
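As a hedged illustration of the idea, the Python sketch below reads the text output of `esxcli vsan storage list` and prints one `esxcli vsan storage remove --ssd=<device>` command per cache device; removing a disk group's cache device removes the whole disk group. The field names `Device`, `Is SSD` and `Is Capacity Tier` are assumptions based on typical output and should be checked against your build. The sketch only builds command strings and runs nothing, so you can review each command before executing it by hand.

```python
import shlex


def parse_vsan_storage_list(text):
    """Split `esxcli vsan storage list` style output into one dict per device.

    Assumes each device block starts with an unindented header line and is
    followed by indented "Key: Value" lines.
    """
    devices = []
    for line in text.splitlines():
        if not line.strip():
            continue  # skip blank lines between device blocks
        if not line[0].isspace():
            devices.append({})  # unindented line: a new device block begins
        elif devices and ":" in line:
            key, _, value = line.strip().partition(":")
            devices[-1][key.strip()] = value.strip()
    return devices


def unclaim_commands(devices):
    """Build one removal command per cache device (assumed field names)."""
    return [
        "esxcli vsan storage remove --ssd=" + shlex.quote(dev["Device"])
        for dev in devices
        if dev.get("Is SSD") == "true" and dev.get("Is Capacity Tier") == "false"
    ]
```

For example, if the output lists a hypothetical cache device `naa.cache01` and a capacity device `naa.cap01`, `unclaim_commands(parse_vsan_storage_list(output))` returns only `esxcli vsan storage remove --ssd=naa.cache01`. Filtering on `Is Capacity Tier` rather than on `Is SSD` alone matters in all-flash disk groups, where capacity devices are flash as well.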

Measure vSAN performance with HCIBench

One of the basic ideas behind the introduction of hyperconverged systems such as vSAN was high data throughput with the lowest possible latency. This is achieved through short paths to the storage media (no SAN fabric infrastructure) and the use of fast flash devices as cache. Pushing such a system to its limits requires standardized tests that put considerable load on the cluster.

VMware provides a benchmark appliance called HCIBench, designed especially for vSAN but also usable with classic server storage clusters. It is a Fling from VMware Labs, freely available and very easy to install.

vSAN Homelab Cluster Ep.2

If you missed it, read part 1: Planning phase.

Unboxing

Last Tuesday a delivery notification made me happy: the hardware was on its way. Now it was time to get the cabling ready. I can't stand Gordian knots of power cords and patch cables; I like to keep them neatly tied together with Velcro tape. To keep things simple, I started with a non-redundant setup for vSAN traffic and LAN. That still meant eight patch cables to label and bundle, plus four cables for the IPMI interface. I found out later that IPMI falls back to the LAN interface if its dedicated port is not connected. That's nice: it saves me four cables and four switch ports.

Host Hardware

All four hosts came fully assembled and had passed a burn-in test. The servers are compact, about the size of a small pizza box: 25.5 cm wide, 22.5 cm deep and 4.5 cm high. But before I press the power button, I want to have a look under the hood. 🙂

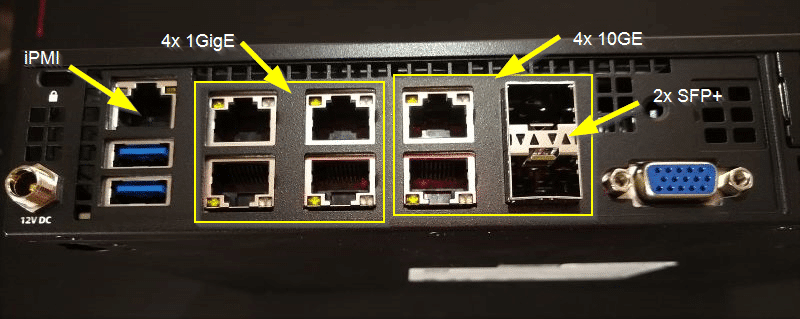

Let's start with the rear side. As you can see in the picture, there are plenty of interfaces for such a small system. In the lower left corner is the 12 V power connector, which can be secured with a screw cap. Next come two USB 3.0 ports with the IPMI interface above them. IPMI provides console and video redirection (HTML5 or Java) with no extra license needed.

Then there are four 1 Gbit ports (Intel i350) and four 10 Gbit ports (Intel X722), two of which are SFP+. In the lower right corner there's a VGA port. Thanks to console redirection it isn't strictly necessary, but it's good to have one in an emergency.