There have been many new releases in the first quarter of 2020: the long-anticipated Veeam Backup & Replication version 10, which we’ve been waiting for since 2017, and also the latest generation of VMware vSphere. While I had the vSAN 7 beta running on my homelab cluster before GA, I’ve worked with Veeam Backup 10 only in customer projects. Unfortunately, there’s no room for playing with new features there unless the customer requests it. One of the new features of Veeam v10 is the ability to use Linux proxies and repositories. With an XFS filesystem on the repository you can use the fast clone feature, which is similar to fast clone with ReFS on Windows.

In this tutorial I will show how to:

- Deploy and size the Veeam server

- Do the base configuration to integrate vCenter

- Build, configure and deploy a Linux proxy and integrate it into the backup infrastructure

- Build, configure and deploy a Linux XFS repository

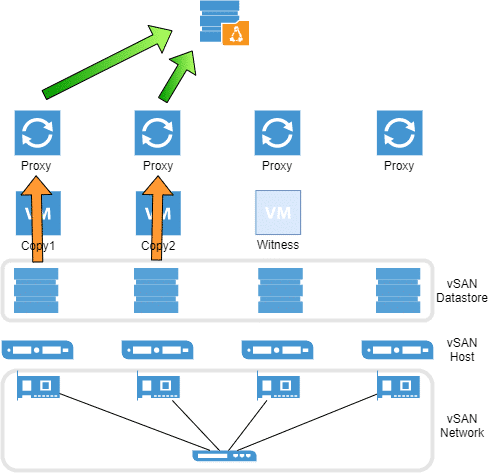

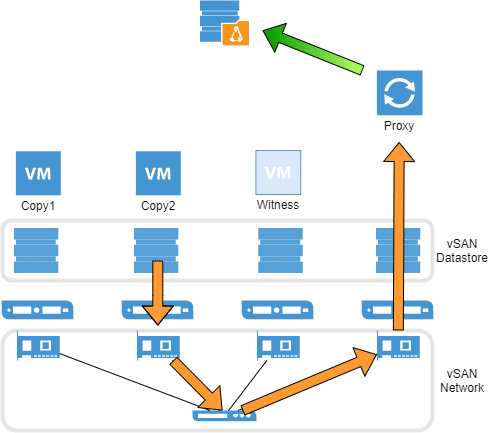

Using Veeam Backup on a vSAN cluster has special design requirements. There’s no Direct SAN backup on VMware vSAN because there is no SAN, no fabric and no HBAs. That leaves only two transport modes: Network Mode (nbd) and Virtual Appliance Mode (hotadd). The latter is recommended for vSAN, but you should deploy one proxy per host to avoid unnecessary traffic on the vSAN interfaces. Hotadd also utilizes the Veeam Advanced Data Fetcher (ADF).

Right: one proxy appliance per vSAN node; direct data transfer without impact on the vSAN network.
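The one-proxy-per-host rule is easy to check against an inventory. The following is only a minimal sketch: the host names, proxy names and the `check_proxy_placement` helper are made up for illustration and have nothing to do with any Veeam or VMware API. It takes a list of vSAN hosts and a mapping of proxy VMs to the host they run on, and reports hosts that have no proxy at all. Hosts with more than one proxy are listed too, purely as a hint.

```python
from collections import Counter


def check_proxy_placement(hosts, proxies):
    """Compare proxy placement with the one-proxy-per-host design.

    hosts:   iterable of vSAN host names (hypothetical inventory data)
    proxies: dict mapping proxy VM name -> host it currently runs on
    Returns (hosts_without_proxy, hosts_with_several_proxies).
    """
    per_host = Counter(proxies.values())  # missing hosts count as 0
    missing = sorted(h for h in hosts if per_host[h] == 0)
    several = sorted(h for h in hosts if per_host[h] > 1)
    return missing, several


# Hypothetical four-node cluster with three proxies deployed so far.
hosts = ["esx01", "esx02", "esx03", "esx04"]
proxies = {"vproxy01": "esx01", "vproxy02": "esx02", "vproxy03": "esx02"}

missing, several = check_proxy_placement(hosts, proxies)
print("Hosts without a proxy:", missing)
print("Hosts with more than one proxy:", several)
```

In a real environment the two inputs would have to come from the vCenter inventory. How to collect them is left open here.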

Talking about licenses: Having Linux proxies on each host will reduce the cost of Windows licensing. One more reason to play around with this new feature. A Veeam license will be required too, but as a vExpert I can get an NFR (not for resale) license, which is valid for one year. Just one of the advantages of being a vExpert. 🙂

Let the games begin. We’ll need a Veeam server that holds the job database and the main application. The proxy and repository roles will be kept on individual (Linux) servers.
