VMware vSAN was developed about 10 years ago. The year was 2012, when magnetic disks were predominantly used for data storage and flash media was practically worth its weight in gold. It was during this time that the idea behind vSAN was born: hybrid data storage with spinning disks for bulk data and flash media as cache. Flash devices at this time used the same interfaces and protocols as magnetic disks. As a result, they could not reach their full potential; the interface was always the bottleneck.

Today, more than 10 years later, we have more advanced flash storage with high data density and powerful protocols such as NVMe. The price per TB for flash is now on par with magnetic SAS disks, so flash has practically replaced magnetic disks. In addition, there are higher possible bandwidths in the network, higher core density in the CPU, and completely new requirements such as ML/AI or containers. The time has come for a new type of vSAN data storage that can fully leverage the potential of new storage technologies.

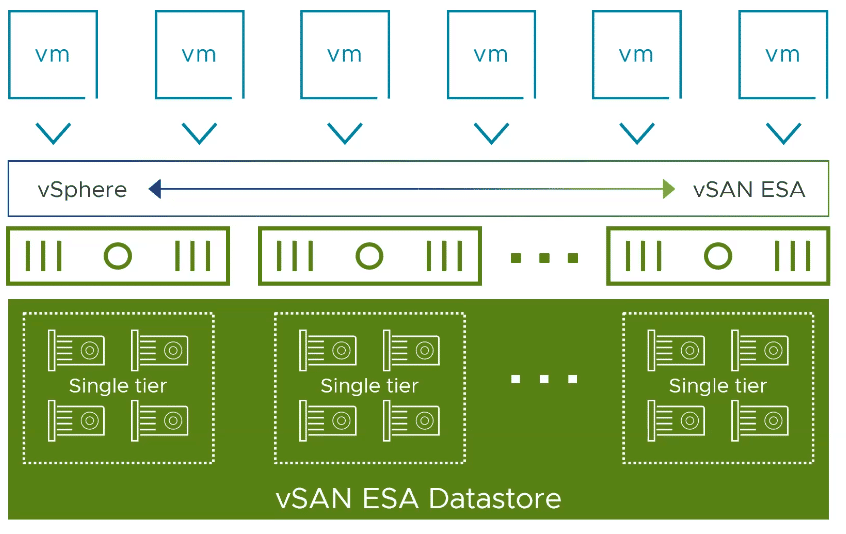

vSAN Express Storage Architecture (ESA)

In a nutshell: vSAN ESA is an optional data storage architecture. The traditional disk group model will continue to exist, even under vSAN 8.

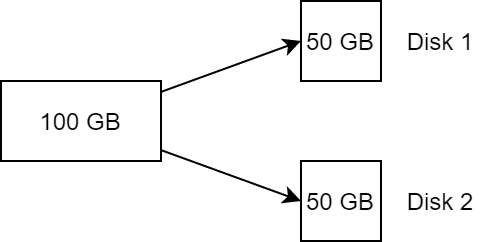

VMware vSAN ESA is a flexible single-tier architecture. This means that it does not require disk groups and no longer distinguishes between cache and capacity layers. It is optimized for the use of modern NVMe flash storage. All storage devices of a host are gathered in a storage pool.
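To make the difference between the two layouts more tangible, here is a minimal conceptual sketch in Python. It is not a VMware API: the class names `Device`, `DiskGroup`, and `StoragePool`, the device names, and the 4 TB device size are all made up for illustration. It only models the idea that a disk group reserves one device as cache, while an ESA storage pool treats every device of a host the same. Redundancy and file system overhead are deliberately ignored.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Device:
    """A single flash device in a host (hypothetical model)."""
    name: str
    size_tb: float


@dataclass
class DiskGroup:
    """Disk group model: one cache device in front of capacity devices."""
    cache: Device
    capacity: List[Device]

    def raw_capacity_tb(self) -> float:
        # The cache device only buffers I/O and adds no usable capacity.
        return sum(d.size_tb for d in self.capacity)


@dataclass
class StoragePool:
    """ESA model: a flat pool without a cache/capacity distinction."""
    devices: List[Device] = field(default_factory=list)

    def raw_capacity_tb(self) -> float:
        # Every device in the pool contributes capacity.
        return sum(d.size_tb for d in self.devices)


# Four identical (assumed) 4 TB NVMe devices in one host.
devices = [Device(f"nvme{i}", 4.0) for i in range(4)]

group = DiskGroup(cache=devices[0], capacity=devices[1:])
pool = StoragePool(devices=devices)

print("disk group raw capacity (TB):", group.raw_capacity_tb())
print("storage pool raw capacity (TB):", pool.raw_capacity_tb())
```

With the same four devices, the disk group counts only three of them toward capacity, while the storage pool counts all four.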

There is no upgrade path from the disk group model to ESA. Thus, the new architecture can only be used in greenfield deployments. The vSAN nodes must be explicitly qualified for ESA, and there will be dedicated vSAN ReadyNodes for it.
