VMware will be sunsetting the NSX native load balancers. Customers should migrate to the currently supported NSX Advanced Load Balancer (Avi), which simplifies operations today while preparing you for your multi-cloud and container strategies tomorrow. Avi works across all environments beyond the NSX framework, expanding use cases to public cloud, containers and app security while adding capabilities for GSLB, WAF and analytics. A migration tool will be available to make moving your existing configuration to the current technology easy and painless.

Heads up! Watch your NIC order when adding more hosts to VCF

VMware Cloud Foundation is a unified SDDC platform for the hybrid cloud. It is based on VMware’s compute, storage, and network virtualization.

VCF can be expanded with more workload domains by adding further hosts, or it can be stretched over two availability zones (AZs). The expansion is initiated by and under the control of SDDC-Manager. The procedure is fairly straightforward, and SDDC-Manager does all the configuration tasks in the background, such as forming vSAN clusters, networks, kernel ports, vCenters and NSX control planes. The steps are:

- set up hosts with the ESXi base image

- configure a management IP address

- set root credentials

- configure DNS and NTP

- import new hosts into SDDC-Manager

- deploy new WLD
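DNS is one of the prep steps where a small mistake is easy to make. The Python sketch below checks that a host’s forward and reverse lookups agree before you import it. It assumes VCF expects matching forward (A) and reverse (PTR) records for every host, which is my understanding; check the VCF planning guide for your version. Host names and addresses used with it are hypothetical.

```python
import socket
from typing import Callable, List, Tuple


def check_forward_reverse(
    fqdn: str,
    expected_ip: str,
    resolve: Callable[[str], str] = socket.gethostbyname,
    reverse: Callable[[str], Tuple[str, list, list]] = socket.gethostbyaddr,
) -> List[str]:
    """Return a list of problems; an empty list means both lookups match."""
    problems = []
    # Forward lookup: FQDN -> IP must return the planned management IP
    try:
        ip = resolve(fqdn)
        if ip != expected_ip:
            problems.append(f"{fqdn} resolves to {ip}, expected {expected_ip}")
    except OSError as exc:  # socket.gaierror is a subclass of OSError
        problems.append(f"forward lookup for {fqdn} failed: {exc}")
    # Reverse lookup: IP -> name must return the same FQDN (trailing dot ignored)
    try:
        name, _aliases, _addrs = reverse(expected_ip)
        if name.lower().rstrip(".") != fqdn.lower().rstrip("."):
            problems.append(f"{expected_ip} reverses to {name}, expected {fqdn}")
    except OSError as exc:  # socket.herror is a subclass of OSError
        problems.append(f"reverse lookup for {expected_ip} failed: {exc}")
    return problems
```

Call it once per new host, e.g. `check_forward_reverse("esx05.example.local", "192.0.2.15")`; an empty list means forward and reverse records agree. The resolver functions are parameters only so the check can be tried without a real DNS server.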

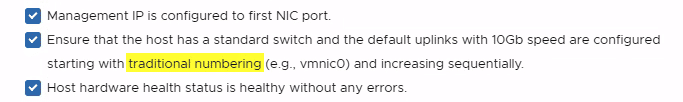

There is a pitfall that can easily be overlooked: the order of the new host’s NICs. Before we can import new hosts, we get to see a checklist of host requirements. The hosts need to have two NICs with at least 10 GBit/s.

While reading the list, there’s a little detail that is often overlooked: the two NICs must be numbered vmnic0 and vmnic1. Unfortunately this seems to be hard-coded and cannot be changed (as of the current version, 4.2). To make matters worse, many server systems have onboard 1 GBit network adapters. There’s a KB article that explains how VMware ESXi determines the order in which names are assigned to network devices: it starts with onboard NICs and then continues with PCIe cards. As a result, you might end up with two 1 GBit onboard NICs as vmnic0 and vmnic1. In this case the bringup of the VCF expansion will fail.
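To spot this before importing a host, you can look at the output of `esxcli network nic list` on the host (ESXi Shell or SSH). The sketch below parses that table and flags vmnic0/vmnic1 if they link at less than 10 GBit/s. It assumes the column layout I’m used to (Name first, Speed in Mbit/s as the sixth column, two header lines); verify it against your ESXi build. The Speed column shows the negotiated link speed, so a 10 GBit NIC without link shows 0 and is flagged as well.

```python
from typing import Dict, List

REQUIRED = ("vmnic0", "vmnic1")  # the NICs VCF expansion uses, per the text


def parse_nic_speeds(esxcli_output: str) -> Dict[str, int]:
    """Map vmnic name -> link speed in Mbit/s from `esxcli network nic list`.

    Assumes two header lines (column titles and dashes), then one row per NIC
    with Name in the first column and Speed in the sixth. Only Description,
    the last column, contains spaces, so splitting on whitespace is safe here.
    """
    speeds = {}
    for line in esxcli_output.strip().splitlines()[2:]:  # skip both header lines
        cols = line.split()
        if len(cols) >= 6 and cols[0].startswith("vmnic"):
            speeds[cols[0]] = int(cols[5])
    return speeds


def check_first_two_nics(speeds: Dict[str, int], min_mbit: int = 10000) -> List[str]:
    """Return problems with vmnic0/vmnic1; an empty list means they look fine."""
    problems = []
    for name in REQUIRED:
        if name not in speeds:
            problems.append(f"{name} not found")
        elif speeds[name] < min_mbit:
            problems.append(f"{name} links at {speeds[name]} Mbit/s, need >= {min_mbit}")
    return problems
```

Paste the command output into `parse_nic_speeds()` and hand the result to `check_first_two_nics()`; any entry in the returned list means the expansion is likely to run into the NIC order problem.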

While you can choose NICs during the initial VCF bringup, this is not possible during expansion, and this time there’s no bringup sheet either. You also can’t select more than two NICs in SDDC-Manager; for that you’ll need to use API calls.

Workaround

Currently there’s no other way than to disable the onboard NICs in the system BIOS. If your desired NICs still get a higher number, you’ll need to move the PCIe card to a slot with a lower number.
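The effect of the workaround can be illustrated with a deliberately simplified model of the ordering described above: onboard NICs first, then PCIe cards by slot and port. This is not ESXi’s actual naming algorithm (the KB article has the details), and the `Nic` fields, labels and slot numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Nic:
    label: str        # free-text description, e.g. "onboard port 1"
    onboard: bool     # True for onboard (LOM) adapters
    slot: int         # PCIe slot number; 0 for onboard NICs in this model
    port: int         # port index on the adapter
    speed_gbit: int   # nominal speed in GBit/s


def assign_vmnic_names(nics: List[Nic]) -> Dict[str, Nic]:
    """Simplified model: onboard NICs first, then PCIe cards by slot, then port."""
    ordered = sorted(nics, key=lambda n: (not n.onboard, n.slot, n.port))
    return {f"vmnic{i}": nic for i, nic in enumerate(ordered)}


def expansion_ready(names: Dict[str, Nic], min_gbit: int = 10) -> bool:
    """True if vmnic0 and vmnic1 exist and both meet the 10 GBit requirement."""
    return all(
        n in names and names[n].speed_gbit >= min_gbit for n in ("vmnic0", "vmnic1")
    )


if __name__ == "__main__":
    server = [
        Nic("onboard port 1", True, 0, 0, 1),
        Nic("onboard port 2", True, 0, 1, 1),
        Nic("PCIe card port 1", False, 3, 0, 25),
        Nic("PCIe card port 2", False, 3, 1, 25),
    ]
    names = assign_vmnic_names(server)
    print(names["vmnic0"].label, expansion_ready(names))  # onboard port 1 False
    # Workaround: onboard NICs disabled in the BIOS no longer get a vmnic name.
    names = assign_vmnic_names([n for n in server if not n.onboard])
    print(names["vmnic0"].label, expansion_ready(names))  # PCIe card port 1 True
```

In this model, moving the card to a lower-numbered slot corresponds to lowering its `slot` value, which pushes its ports ahead of other PCIe adapters in the naming order.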

Basic Setup vRNI 5.0

VMware vRealize Network Insight (vRNI) – a.k.a “Verni” – version 5.0 was released in late 2019 and can be obtained from the VMware vRNI download page.

I will briefly describe the setup process here. First of all, the approx. 6 GB image file of the appliance must be downloaded from VMware Downloads (login required). The appliance then needs to be deployed into an existing cluster via the “Deploy OVF Template” wizard of the vSphere Client.
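If you’d rather script the deployment than click through the wizard, VMware’s `ovftool` can deploy the same appliance image. The sketch below only assembles the command line. The file name, VM name, datastore, port group and vCenter inventory path are placeholders, and appliance-specific OVF properties are deliberately left out because I haven’t verified their names for vRNI 5.0; running `ovftool` with only the image path as argument prints the properties the appliance expects.

```python
import shlex
from typing import List


def build_ovftool_cmd(ova_path: str, vm_name: str, datastore: str,
                      network: str, target: str) -> List[str]:
    """Assemble (but do not run) an ovftool deployment command."""
    return [
        "ovftool",
        "--acceptAllEulas",          # accept the appliance EULA non-interactively
        f"--name={vm_name}",         # VM name in the vCenter inventory
        f"--datastore={datastore}",  # target datastore
        f"--network={network}",      # port group for the appliance NIC
        "--diskMode=thin",           # thin-provisioned disks
        "--powerOn",                 # power on after deployment
        ova_path,
        target,                      # vi:// locator of the target cluster
    ]


if __name__ == "__main__":
    cmd = build_ovftool_cmd(
        "vrni-platform.ova",         # placeholder file name
        "vrni-platform01",
        "datastore01",
        "VM Network",
        # '@' inside the user name must be URL-encoded as %40 in the locator
        "vi://administrator%40vsphere.local@vcenter.example.local/DC01/host/Cluster01",
    )
    print(shlex.join(cmd))
```

Printing the joined command instead of executing it keeps the sketch safe to run; copy the output to a machine with OVF Tool installed once the placeholders are filled in.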

Deployment of the Platform Appliance (Collector)

There’s some naming confusion. The collector appliance is now called “platform” appliance. This makes it a bit difficult to find if you search for the collector in the download portal. 😉

vSwitch rescue from the CLI

Virtual Distributed Switches have many advantages over standard switches. Because their configuration is centralized across all hosts, they’re less prone to configuration errors than standard switches. Call me old-fashioned, but I prefer to have at least the host’s management interface on a standard switch. In case something bad happens, you can still access the host and make changes to the interface.

Recently a customer’s host had failed. After restoring the configuration, the vmnics had for some reason been swapped between the distributed vSwitches, and the host could be configured with neither the Host Client nor vCenter. Having been short on vmnics in the past, the customer had placed the Management Network on a distributed port group on a distributed vSwitch. This is a valid configuration and usually not a problem, but in this particular case it was: I was literally locked out of the host. Reassigning NICs in the DCUI didn’t work, because they were all claimed by the distributed vSwitches and thus not available to standard switches.

What now?

There’s help, but you need to get to the command line via the DCUI.

Log in to the DCUI console and select “Troubleshooting Options” from the main menu.
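The remaining steps aren’t part of this excerpt. As a hedged sketch of the usual approach with the classic `esxcfg-*` tools, the Python snippet below only prints (and does not run) a command sequence that frees one uplink from the distributed switch and builds a standard vSwitch with a management port group on it. Switch names, port group, DVPort ID, VLAN and addresses are placeholders: take the real DVPort ID from the `esxcfg-vswitch -l` output. The old management VMkernel port on the distributed switch is not handled here, and depending on your setup you may also have to enable management traffic on the new VMkernel port.

```python
import shlex
from typing import List, Optional


def rescue_plan(uplink: str, dvs_name: str, dvport_id: str, vswitch: str,
                portgroup: str, ip: str, netmask: str,
                vlan: Optional[int] = None) -> List[List[str]]:
    """Return esxcfg-* commands as argument lists. Dry run: nothing is executed."""
    plan = [
        # Show standard and distributed switches, including uplink DVPort IDs
        ["esxcfg-vswitch", "-l"],
        # Unlink the uplink from its DVPort on the distributed switch
        ["esxcfg-vswitch", "-Q", uplink, "-V", dvport_id, dvs_name],
        # Create a standard vSwitch and attach the freed uplink to it
        ["esxcfg-vswitch", "-a", vswitch],
        ["esxcfg-vswitch", "-L", uplink, vswitch],
        # Add a port group for management traffic
        ["esxcfg-vswitch", "-A", portgroup, vswitch],
    ]
    if vlan is not None:
        # Tag the port group with the management VLAN
        plan.append(["esxcfg-vswitch", "-v", str(vlan), "-p", portgroup, vswitch])
    # Create a VMkernel port with a static IP on the new port group
    plan.append(["esxcfg-vmknic", "-a", "-i", ip, "-n", netmask, portgroup])
    return plan


if __name__ == "__main__":
    for cmd in rescue_plan("vmnic0", "dvSwitch01", "16", "vSwitch0",
                           "Management Network", "192.0.2.21", "255.255.255.0",
                           vlan=100):
        print(shlex.join(cmd))
```

Printing the commands first gives you a chance to double-check every name against the `esxcfg-vswitch -l` output before typing them into the ESXi Shell.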