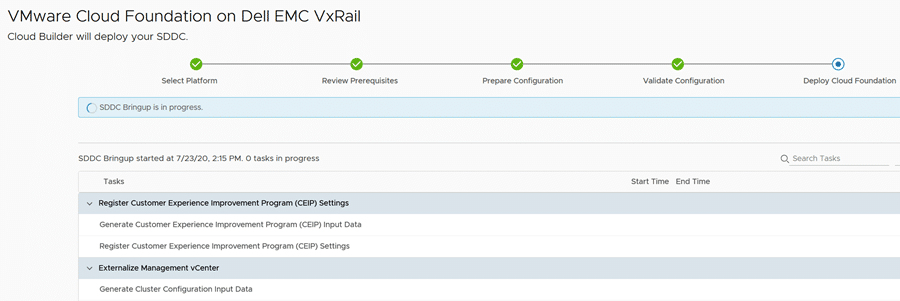

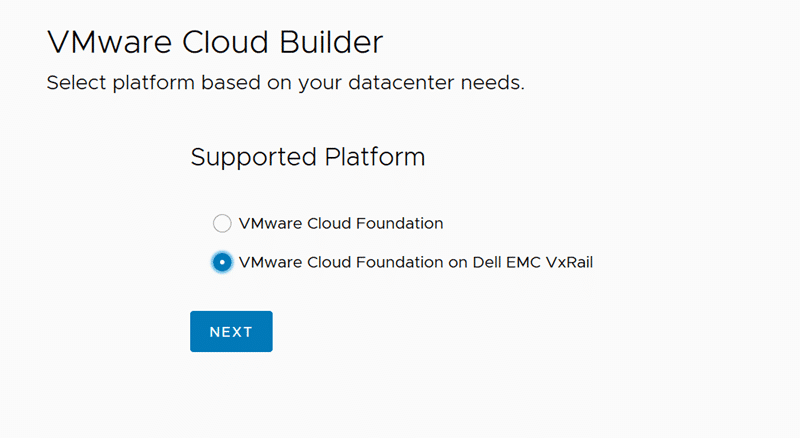

In a VMware Cloud Foundation (VCF) greenfield deployment, the Cloud Builder appliance is intended for one-time use. It automatically deploys the management infrastructure of a VCF cluster and can be discarded afterwards.

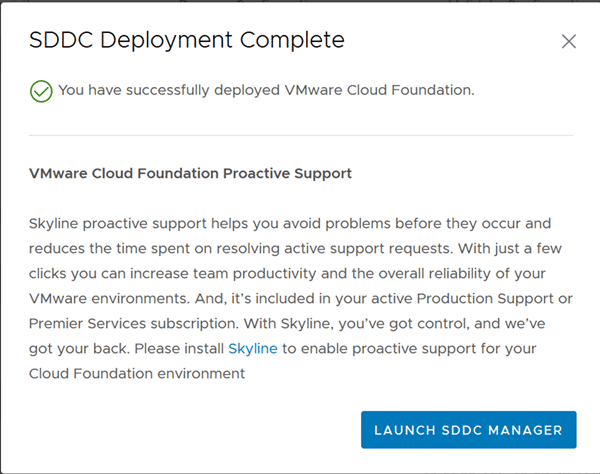

Ideally, the previously created workbook or JSON file is processed and the cluster is created successfully.

The Cloud Builder UI, however, offers no option to reset the wizard and start over from scratch, for example when requirements have changed and a new or adapted workbook is to be used, or when you want to use the appliance for another rollout. In these cases, the appliance would have to be completely redeployed. Errors in the JSON file cannot be corrected through the UI either.

However, there is a trick to reset the Cloud Builder to its initial state and feed it a modified JSON file. It relies on an API call that may have been ‘forgotten’ during development. To use it, log in to the console of the Cloud Builder as user root.

[Optional] It may be easier to grant the root user temporary SSH access. Log in to the VM console as root and edit the sshd configuration.

    sudo vi /etc/ssh/sshd_config

In sshd_config, look for the line PermitRootLogin no and comment it out by putting a # in front of it.

    # PermitRootLogin no
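The manual edit above can also be scripted. The following is only a minimal sketch: the function name `allow_root_login` and the `.bak` backup suffix are made up for illustration, and it assumes the line reads exactly `PermitRootLogin no` (apart from whitespace). Whether commenting the line out is enough to permit root logins depends on the sshd defaults of the appliance.

```shell
#!/bin/sh
# Hypothetical helper, not part of Cloud Builder: comments out
# "PermitRootLogin no" in an sshd configuration file.
# Usage: allow_root_login [path]   (defaults to /etc/ssh/sshd_config)
allow_root_login() {
    config="${1:-/etc/ssh/sshd_config}"
    backup="$config.bak"   # assumed backup name, used to undo the change later

    # Keep a copy of the original file before touching it.
    cp -p "$config" "$backup" || return 1

    # Rewrite the file from the backup, prefixing the matching line with '#'.
    sed 's/^[[:space:]]*PermitRootLogin[[:space:]][[:space:]]*no[[:space:]]*$/# PermitRootLogin no/' \
        "$backup" > "$config"
}

# Example call on the appliance (run as root), followed by a service restart:
#   allow_root_login && systemctl restart sshd
```

Since the SSH access is meant to be temporary, copying the backup file back over the configuration and restarting sshd again should restore the original state.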

Save the configuration, restart the sshd service so the change takes effect (for example with systemctl restart sshd), and open an SSH session as user root. We can now execute the API call as user root.

    curl -X GET http://localhost:9080/bringup-app/bringup/sddcs/test/deleteAll
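If you want feedback on whether the call went through, it can be wrapped in a small function that inspects the HTTP status code. This is a sketch under assumptions: the function name `reset_cloud_builder` is hypothetical, and treating any 2xx status as success is a guess, since the response of this endpoint is not described here. Only the URL is taken from the command above.

```shell
#!/bin/sh
# Hypothetical wrapper around the reset call shown above.
# Usage: reset_cloud_builder [base_url]   (defaults to http://localhost:9080)
reset_cloud_builder() {
    base_url="${1:-http://localhost:9080}"

    # -s: no progress output, -o /dev/null: discard the response body,
    # -w '%{http_code}': print only the HTTP status code.
    status=$(curl -s -o /dev/null -w '%{http_code}' -X GET \
        "$base_url/bringup-app/bringup/sddcs/test/deleteAll")

    case "$status" in
        2??) echo "Reset request accepted (HTTP $status)" ;;   # assumption: 2xx means success
        *)   echo "Reset request failed (HTTP $status)" >&2
             return 1 ;;
    esac
}

# Example call in the root session on the appliance:
#   reset_cloud_builder
```

When curl cannot reach the service at all, it reports the status as 000, which this sketch treats as a failure.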

Log in to the web UI of the Cloud Builder appliance. You can now start from the beginning.

Links

VMware Cloud Platform Tech Zone – Re-use Cloud Builder for Another Deployment