Backing up and restoring an ESXi host configuration is a standard procedure that can be used when performing maintenance on the host. The backup covers not only the host name, IP address and passwords, but also the NIC and vSwitch configuration, object ID and many other properties. Even after a complete reinstallation, a host can recover all the properties of its original installation.
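
For reference, the backup itself can be taken with PowerCLI as well. The following is only a minimal sketch, assuming VMware PowerCLI is installed; the host address and the destination folder are placeholders I made up for illustration and have to be replaced with your own values.

```powershell
# Placeholders (assumptions, not taken from a real setup):
$esxHost   = '192.168.1.50'   # IP address or FQDN of the ESXi host
$backupDir = 'C:\Backup'      # existing local folder for the backup bundle

# Connect to the host (or to the vCenter that manages it); prompts for credentials
Connect-VIServer -Server $esxHost -Credential (Get-Credential)

# Write the host configuration bundle to the destination folder
Get-VMHostFirmware -VMHost $esxHost -BackupConfiguration -DestinationPath $backupDir
```

The cmdlet writes a .tgz bundle into the destination folder. That file is what the restore further down expects as its source path.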

Recently I wanted to reformat the boot disk of a host in my homelab, which required a fresh installation of ESXi. The first boot of the clean installation worked fine and the host got a new IP address via DHCP.

The next step was to restore the original configuration via PowerCLI. To do this, first put the host into maintenance mode.

Set-VMHost -VMHost <Host-IP> -State "Maintenance"

Now the host configuration can be restored.

Set-VMHostFirmware -VMHost <Host-IP> -Restore -SourcePath <path_to_config_file>

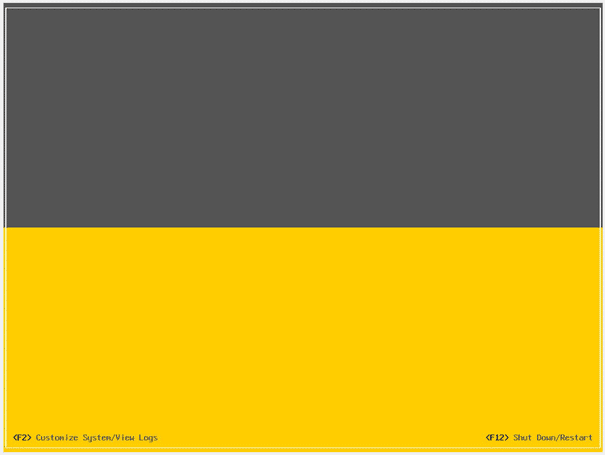

The command prompts for the root login and then reboots the host automatically. At the end of the boot process, I was greeted by an empty DCUI.

I had never seen this before. I was able to log in (with the original password), but all network connections were gone. The management network configuration could not be selected either (grayed out). The host was both blind and deaf.
