What can be done if the production vCenter Server appliance is damaged and you need to migrate a vSAN cluster to a new vCenter appliance?

In this post, I will show how to migrate a running vSAN cluster from one vCenter instance to a new vCenter under full load.

Anyone who works with vSAN will have a sinking feeling in their guts thinking about this. Why would one do such a thing? Wouldn’t it be better to put the cluster into maintenance mode? – In theory, yes. In practice, however, we repeatedly encounter constraints that do not allow a maintenance window in the near future.

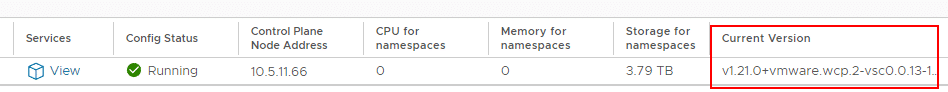

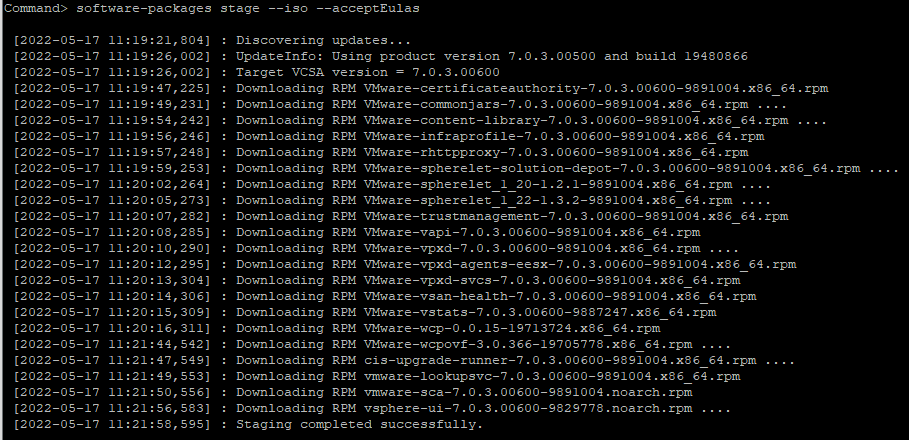

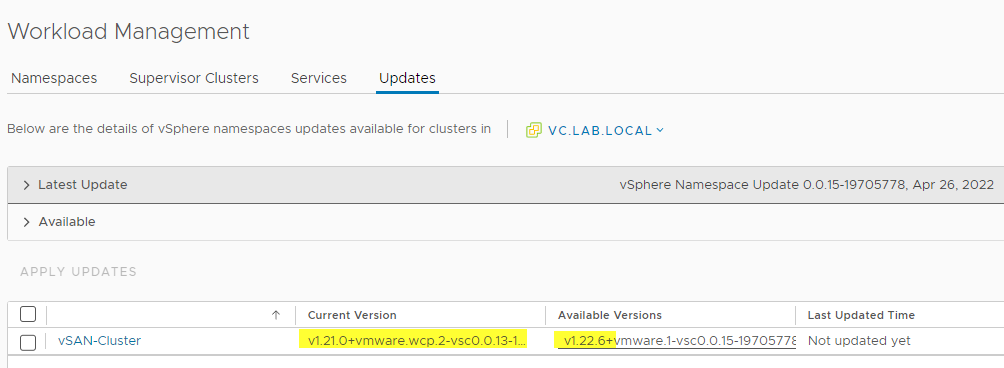

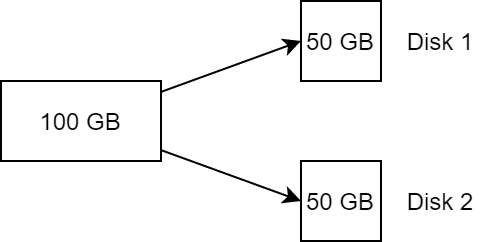

Normally, vCenter Server appliances are solid and low-maintenance units. Either they work, or they are completely destroyed. In the latter case, a new appliance could be deployed and a configuration restore could be applied from the backup. None of this applied to a recent project. VCSA 6.7 was still working halfway, but key vSAN functionality was no longer operational in the UI. An initial idea to fix the problem with an upgrade to vCenter v7 and thus to a new appliance proved unsuccessful. Cross-vCenter migration of VMs (XVM) to a new vSAN cluster was also not possible, firstly because this feature was only available starting with version 7.0 update 1c, and secondly because only two new replacement hosts were available. Too few for a new vSAN cluster. To make things worse, the source cluster was also at its capacity limit.

There was only one possible way out: stabilize the cluster and transfer it to a new vCenter under full load.

There is an old, but still valuable post by William Lam on this topic. With this, and the VMware KB 2151610 article, I was able to work out a strategy that I would like to briefly outline here.

The process actually works because, once set up and configured, a vSAN cluster can operate autonomously from the vCenter. The vCenter is only needed for purposes of monitoring and configuration changes.

Continue reading “vSAN Cluster Live-Migration to new vCenter instance”