How to troubleshoot vMotion issues

In most cases, vMotion problems come down to networking. In this post I will walk through a real case to show how to track down the problem and find the possible culprits.

What’s the problem?

Initiating a host vMotion between esx1 and esx2 passes all pre-checks, but then fails at 21% progress.

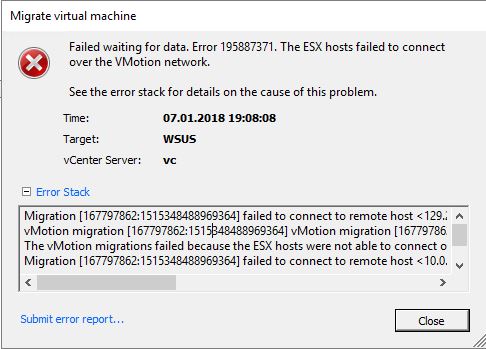

Migrate virtual machine: Failed waiting for data. Error 195887371. The ESX hosts failed to connect over the VMotion network.

See the error stack for details on the cause of this problem.

    Time: 07.01.2018 19:08:08
    Target: WSUS
    vCenter Server: vc

Error Stack

    Migration [167797862:1515348488969364] failed to connect to remote host <192.168.45.246> from host <10.0.100.102>: Timeout.
    vMotion migration [167797862:1515348488969364] vMotion migration [167797862:1515348488969364] stream thread failed to connect to the remote host <192.168.45.246>: The ESX hosts failed to connect over the VMotion network
    The vMotion migrations failed because the ESX hosts were not able to connect over the vMotion network. Check the vMotion network settings and physical network configuration.
    Migration [167797862:1515348488969364] failed to connect to remote host <10.0.100.102> from host <192.168.45.246>: Timeout.
    vMotion migration [167797862:1515348488969364] failed to create a connection with remote host <10.0.100.102>: The ESX hosts failed to connect over the VMotion network
    Failed waiting for data. Error 195887371. The ESX hosts failed to connect over the VMotion network.
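One detail worth pulling out of an error stack like this is which addresses actually tried to reach each other. The following is a minimal Python sketch, not part of the original troubleshooting session: it extracts the address pairs from the two "failed to connect" lines and checks whether they share a subnet. The /24 prefix length and the helper names `endpoint_pairs` and `same_subnet` are assumptions made for illustration.

```python
import ipaddress
import re

# The two "failed to connect" lines copied from the error stack.
ERROR_STACK = """\
Migration [167797862:1515348488969364] failed to connect to remote host <192.168.45.246> from host <10.0.100.102>: Timeout.
Migration [167797862:1515348488969364] failed to connect to remote host <10.0.100.102> from host <192.168.45.246>: Timeout.
"""

# Captures the remote (destination) address first, then the local (source) address.
PAIR = re.compile(r"remote host <([\d.]+)> from host <([\d.]+)>")


def endpoint_pairs(text):
    """Return (source, destination) address pairs found in the error text."""
    return [(ipaddress.ip_address(src), ipaddress.ip_address(dst))
            for dst, src in PAIR.findall(text)]


def same_subnet(a, b, prefix=24):
    """True if both addresses are in the same network (prefix length is an assumption)."""
    net = ipaddress.ip_network(f"{a}/{prefix}", strict=False)
    return b in net


pairs = endpoint_pairs(ERROR_STACK)
for src, dst in pairs:
    status = "same subnet" if same_subnet(src, dst) else "different subnets"
    print(f"{src} -> {dst}: {status}")
```

If the two ends of a connection attempt sit in different subnets, that is a hint to look at which VMkernel adapters each host is using for vMotion.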

Check VLAN

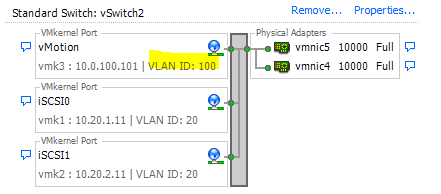

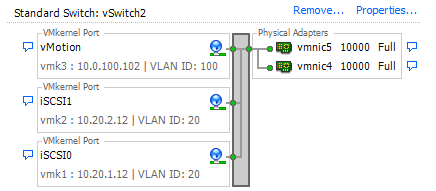

My first step was to check the VLAN settings of the vMotion VMkernel adapters.

The vMotion VMkernel ports on both hosts were set to VLAN 100.

All switch ports were tagged members of VLAN 20 (iSCSI) and VLAN 100 (vMotion).

vMotion enabled?

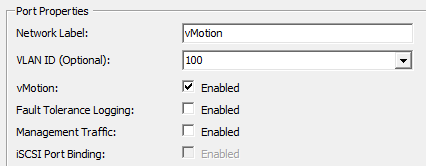

One of the simplest causes is forgetting to enable vMotion on the VMkernel port. It may sound stupid, but it happens quite often. 🙂

But as you can see in the picture above, vMotion is enabled on this adapter (and on the other host too).

Check connectivity

The next step is usually to send a vmkping over the adapter in question. We’ll send a ping from vmk3 (vMotion) on esx1 to its counterpart on esx2 with IP 10.0.100.102. The -4 option forces IPv4, -I selects the outgoing interface vmk3 (vMotion), and -v enables verbose output.

    [root@esx1:~] vmkping -4 -v -I vmk3 10.0.100.102
    PING 10.0.100.102 (10.0.100.102): 56 data bytes
    64 bytes from 10.0.100.102: icmp_seq=0 ttl=64 time=0.353 ms
    64 bytes from 10.0.100.102: icmp_seq=1 ttl=64 time=0.502 ms
    64 bytes from 10.0.100.102: icmp_seq=2 ttl=64 time=0.456 ms

    --- 10.0.100.102 ping statistics ---
    3 packets transmitted, 3 packets received, 0% packet loss
    round-trip min/avg/max = 0.353/0.437/0.502 ms

This is a very important test. It shows that the two vMotion VMkernel adapters can reach each other.

What about MTU?

Jumbo frames use a Maximum Transmission Unit (MTU) of 9000 bytes, and a mismatched MTU is a common source of connectivity issues. You need to make sure that every interface along the path has MTU 9000 set. We can check this with a customized ping. Option -s sets the ICMP payload size to 8972 bytes, which together with the 8 byte ICMP header and the 20 byte IPv4 header adds up to a 9000 byte packet. Option -d sets the “do not fragment” flag. So we send a packet that exactly fills a 9000 byte MTU to the VMkernel port of esx2. If a standard MTU of 1500 is set anywhere on the path, the ping will fail.

    [root@esx1:~] vmkping -4 -d -s 8972 -I vmk3 10.0.100.102
    PING 10.0.100.102 (10.0.100.102): 8972 data bytes
    8980 bytes from 10.0.100.102: icmp_seq=0 ttl=64 time=0.601 ms
    8980 bytes from 10.0.100.102: icmp_seq=1 ttl=64 time=0.449 ms
    8980 bytes from 10.0.100.102: icmp_seq=2 ttl=64 time=0.447 ms

    --- 10.0.100.102 ping statistics ---
    3 packets transmitted, 3 packets received, 0% packet loss
    round-trip min/avg/max = 0.447/0.499/0.601 ms

The result shows that there’s no problem with the MTU. All packets were transmitted without fragmentation.
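The value 8972 passed to -s is not arbitrary: it is the MTU minus the IPv4 and ICMP headers. As a small illustration, here is the arithmetic in Python, assuming an IPv4 header without options (20 bytes) and the standard 8 byte ICMP echo header. The function name `max_ping_payload` is made up for this sketch.

```python
IPV4_HEADER_BYTES = 20  # IPv4 header without options (assumption)
ICMP_HEADER_BYTES = 8   # ICMP echo request header


def max_ping_payload(mtu):
    """Largest ICMP echo payload that fits into one unfragmented IPv4 packet."""
    return mtu - IPV4_HEADER_BYTES - ICMP_HEADER_BYTES


for mtu in (1500, 9000):
    # Prints the -s value to use with vmkping -d for each MTU.
    print(f"MTU {mtu}: vmkping -d -s {max_ping_payload(mtu)}")
```

The same calculation gives 1472 for a standard MTU of 1500. It also explains the "8980 bytes from" lines in the reply: 8972 bytes of payload plus the 8 byte ICMP header.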

Other vMotion Networks?

At this point, everything should be fine, but obviously it isn’t.

What if there is another vMotion-enabled VMkernel port in a different network? We could check every VMkernel port manually, but it is smarter to use a script.

Open PowerCLI and connect to your vCenter:

    Connect-VIServer vc

Enter your credentials and execute this one-liner:

    Get-VMHostNetworkAdapter -VMKernel | select VMHost, Name, IP, SubnetMask, PortGroupName, VMotionEnabled, Mtu | where {$_.VMotionEnabled}

It lists every VMkernel adapter, across all hosts, that has vMotion enabled.

    VMHost         : esx2.xxxx.xxxx.de
    Name           : vmk3
    IP             : 10.0.100.102
    SubnetMask     : 255.255.255.0
    PortGroupName  : vMotion
    VMotionEnabled : True
    Mtu            : 9000

    VMHost         : esx1.xxxx.xxxx.de
    Name           : vmk0
    IP             : 192.168.45.246
    SubnetMask     : 255.255.255.0
    PortGroupName  : Management Network
    VMotionEnabled : True
    Mtu            : 1500

    VMHost         : esx1.xxxx.xxxx.de
    Name           : vmk3
    IP             : 10.0.100.101
    SubnetMask     : 255.255.255.0
    PortGroupName  : vMotion
    VMotionEnabled : True
    Mtu            : 9000

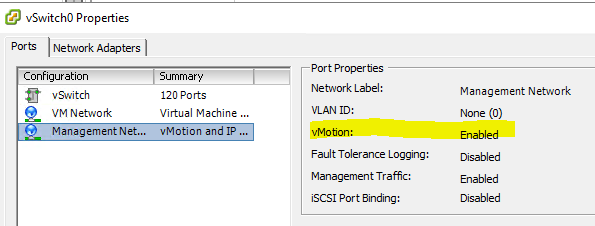

Now here’s the problem: there is still a vMotion-enabled Management Network adapter (vmk0) in subnet 192.168.45.0/24 on esx1.

After vMotion was migrated to a new network, nobody unchecked vMotion on the old Management Network port group. Packets sent over vmk0 on esx1 could not reach a corresponding vMotion VMkernel port on esx2, and the whole process failed.
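The manual comparison of the adapter list can also be automated. The following Python sketch is an illustration only: it takes the three vMotion-enabled adapters reported above (host names shortened to esx1 and esx2) and flags every adapter whose subnet has no vMotion adapter on another host. The function name `orphaned_adapters` and the hard-coded data layout are assumptions for this example.

```python
import ipaddress
from collections import defaultdict

# vMotion-enabled adapters as reported by PowerCLI: (host, adapter, IP, subnet mask).
ADAPTERS = [
    ("esx2", "vmk3", "10.0.100.102", "255.255.255.0"),
    ("esx1", "vmk0", "192.168.45.246", "255.255.255.0"),
    ("esx1", "vmk3", "10.0.100.101", "255.255.255.0"),
]


def orphaned_adapters(adapters):
    """Return adapters whose subnet has no vMotion adapter on any other host."""
    hosts_by_net = defaultdict(set)
    nets = []
    for host, _name, ip, mask in adapters:
        # Derive the network (e.g. 10.0.100.0/24) from address and mask.
        net = ipaddress.ip_interface(f"{ip}/{mask}").network
        hosts_by_net[net].add(host)
        nets.append(net)
    return [(host, name, str(net))
            for (host, name, _ip, _mask), net in zip(adapters, nets)
            if len(hosts_by_net[net]) < 2]


for host, name, net in orphaned_adapters(ADAPTERS):
    print(f"{host} {name}: no vMotion peer in {net}")
```

With the data from this case, the only adapter flagged is vmk0 on esx1 in 192.168.45.0/24, which matches the finding above.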
